In CRBLM Research Spotlight articles, we provide accessible summaries to highlight current and ongoing work being produced by our members on various themes.

New Methods In Auditory Neuroscience

Every scientist needs tools to make their discoveries. Better telescopes let us see more galaxies, mass spectrometers reveal the chemical makeup of substances, particle colliders let us observe matter in extreme situations, and gene editing allows us to see what happens when an organism lacks a specific gene. What, then, are the methods that scientists use to study subjects like hearing, music, and speech? What new tools are being created or refined? We interviewed CRBLM members who have recently created or used new methods.

Many new tools in health and psychology consist of test batteries: perception tests or questionnaires to help us measure some aspect of human cognition. In the domain of music, Coffey and colleagues created one such test to measure how background noise affected people’s music perception. It was modeled after other hearing-in-noise tests designed for speech. “We used simple musical stimuli that are not reliant on linguistic knowledge (so we can use it in kids and non-native speakers), yet also not on advanced musical training,” Coffey says. Functional hearing is an important component of overall health, and these tests go beyond traditional hearing tests to better simulate everyday situations, including non-speech sounds. As Coffey noted, “There is some evidence from longitudinal studies that musical training plays a causal role in the better [hearing in noise] scores we observe in musicians… For those reasons we think it’s worth studying, and with this task it’s easy to add it to other studies that have other main research questions.”

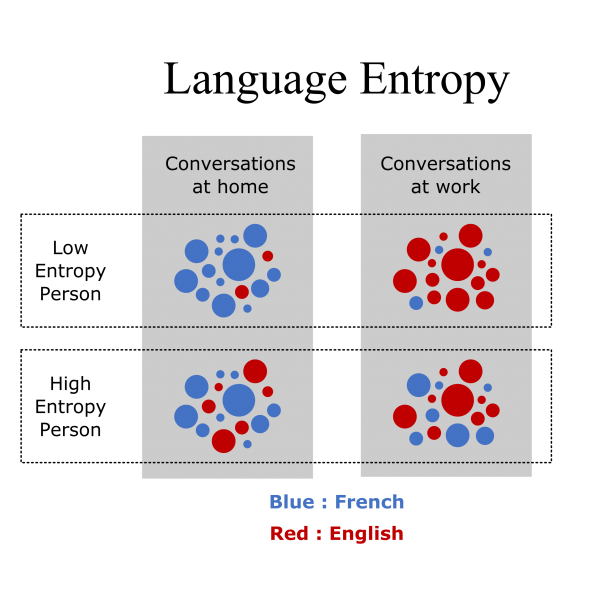

In the domain of language, Gullifer and Titone used questionnaire data to measure a new concept called language entropy—how separate or mixed a multilingual person’s language experience is. A person with low entropy might speak one language exclusively at work and another language at home, with little overlap. In contrast, a person with high entropy might constantly be switching back and forth between languages, no matter the situation. “Researchers tend to use (more or less) standardized questionnaires that ask about the frequency of language usage. So we were able to capitalize on these existing questionnaires to compute entropy,” Gullifer says. Differences in language entropy may change the way that multilinguals process their languages, and how their brain deals with uncertainty more generally. “This was a situation where peer review totally helped in honing the study and methodological approach,” Gullifer says, mentioning that reviewers’ comments sent them “back to the drawing board” but ultimately resulted in a much more elegant measure.
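As a rough illustration of how an entropy score could be computed from usage questionnaires, the sketch below applies Shannon entropy to the share of time a person reports using each language in one context. The function name `language_entropy` and the example proportions are invented for illustration; this is a minimal sketch under those assumptions, not necessarily the authors' exact procedure.

```python
import math

def language_entropy(proportions):
    """Shannon entropy (in bits) of a set of language-use proportions.

    `proportions` is a hypothetical list such as [0.9, 0.1]: the share of
    time a person reports using each language in one context.
    """
    total = sum(proportions)
    if total <= 0:
        raise ValueError("proportions must sum to a positive number")
    # Normalize so the shares sum to 1, then skip zero shares (0 * log 0 = 0).
    shares = [p / total for p in proportions if p > 0]
    return sum(-p * math.log2(p) for p in shares)

separated = language_entropy([1.0, 0.0])  # only one language used in this context
mixed = language_entropy([0.5, 0.5])      # two languages used equally often
print(separated, mixed)
```

With two languages, the score runs from 0 bits (one language only) to 1 bit (both used equally), which matches the low-entropy and high-entropy speakers described above.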

One tenet of scientific reasoning is that correlation and causation are not the same. To provide evidence that event A causes event B, it isn’t enough to show that they occur together; rather, we need to show that changing A in an experiment, without changing anything else, will produce B. In other words, we need to be able to isolate and manipulate different aspects of the system we are studying.

A number of studies by CRBLM members involve isolating and manipulating different parts of the auditory experience. Alkhateeb and colleagues developed a surgical technique to cause temporary hearing loss in rats, which opens the door for studying the effects of hearing loss in a more controlled way. James and colleagues likewise controlled the early sensory experiences of songbirds in a number of different ways to try to understand how early hearing experiences affect vocal learning. They were able to compare untutored zebra finches, deaf finches, and finches tutored by a computer that gave randomized sequences of chirps. Amazingly, there were some commonalities between the mature songs of all the birds, despite their very different upbringings. James and colleagues could therefore suggest that there are certain patterns innate to these birds because of the way their brains and bodies are structured. “Interestingly, some of these patterns are similar to those that are common in human speech and music,” says James, adding that the finches “tend to produce longer song elements at the ends of their song phrases, which is similar to utterance final elongation that happens in human speech… finches with impoverished auditory experiences in development continued to produce songs with these common patterns.”

In humans, we can disrupt the normally tight connection between speech production and sensory feedback to see how people adapt. Barbier and colleagues inserted a retainer-like mold into the mouths of participants, and then monitored how they altered their speech to try to produce the same sounds as before. People make the adjustments quite rapidly, “but it really doesn’t return to 100% normal even after 30 minutes of intense practice,” says senior author Shiller (if you have ever had braces or a retainer, you will be intimately aware of this fact). Sares and colleagues, on the other hand, manipulated the way participants heard their own speech, making their vocal feedback sound higher or lower in pitch, and observed how they corrected for this while their brains were scanned with fMRI. Individuals who stuttered had a different neural response to the shifted auditory feedback than fluent speakers, and they also seemed to have less connectivity between their auditory and motor cortices during the task.

The saying that “the eyes are the window to the soul,” while poetic, also has some scientific merit, something that researchers are taking advantage of. We can observe the eyes to get clues about what is going on in the brain; for instance, tracking eyes as they move and focus can give us an idea of what a person is paying attention to. “Eye movement measures are unrivalled for studying the moment-by-moment processes involved in reading, as pioneered back in the day by Keith Rayner and colleagues,” says Dr. Titone, who uses the technique to study bilingualism. One of her recent papers with graduate student Pauline Palma measured the amount of time that French-English bilinguals spent on ambiguous words in a sentence. These words could have multiple meanings depending on the context (like sage, the plant, or sage, a wise person, which is less common in English). Some of the secondary meanings were also cognates in the participants’ other language (sage in French also means a wise person). Participants spent more time gazing at an ambiguous word when the less common meaning was a cognate, which the authors interpreted as cross-linguistic interference.

The dilation of the pupil can also be very informative: it can indicate that something is pleasurable, effortful, frightening, or cognitively rewarding. In other words, pupil dilation communicates mental arousal. Bianco and colleagues recently showed that the pupil responds to both predictability and pleasure during music listening, and that these two factors interact. “We were really interested in what motivates people to practice,” says senior author Penhune. “When you think about it, practice can be boring, it can be frustrating… so why do people keep doing it? What we wanted was a more objective measure of hedonic response [i.e. pleasure] … so we decided to try pupillometry.”

One of the current challenges for pupillometry is that a dilation of the pupil can mean many things. Zhang and colleagues have been using it in tests of auditory memory—in this case, a dilation of the pupil would not always be associated with enjoyment or motivation, but could sometimes reflect difficulty, increased effort, and heavy cognitive load. Zhang says, “It has a lot of potential to be translated to a clinical tool that can quantify the effortful listening experience hearing aid and cochlear implant users typically report,” but also warns: “we still have a lot of questions unanswered, especially when it comes to realistic and complex situations.” In response to this apparent conflict between pleasure and cognitive effort, Penhune says: “Probably, it’s good to have a little bit of attentional focus (i.e. a little bit of dilation), but perhaps beyond that… you’re overloading.”

Scientists have been able to measure the electrical activity produced by the brain for some time, but new applications and analysis methods allow us to see further than before. Zamm and colleagues used a wireless EEG system from mBrainTrain to record the brain activity of piano players while they played. Wireless EEG is a newer technology for music neuroscience; the lack of restrictive wires makes it ideal for studying performers as they move expressively. The authors were able to replicate EEG signatures observed with traditional systems, specifically motor and auditory responses time-locked to musicians’ movements, as well as brain oscillations that reflect an individual musician’s playing rate.

Zamm sees wireless EEG as an advantage for measuring natural music performance, but says: “The possibility for free movement also gives rise to potentially more artefacts from muscle activity.”

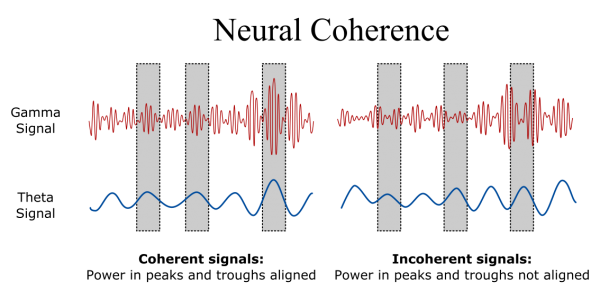

Traditional EEG is also being improved upon, mostly by finding novel analysis techniques. Sengupta and colleagues used a type of analysis called neural phase coherence, which measures how synchronized neuronal signals are in different parts of the brain over time. They used this during a speech production task and showed that, for individuals who stutter, pre-speech brainwaves in the theta and gamma range were not well-synchronized with each other, compared to fluent speakers.
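To give a flavour of what "synchronized over time" can mean numerically, here is a minimal sketch of one common synchrony index, the phase-locking value: the length of the average unit vector built from the phase differences between two signals. It is a generic illustration on synthetic phases, not necessarily the coherence measure used by Sengupta and colleagues, and the function and variable names are made up for this example.

```python
import cmath
import math

def phase_locking_value(phases_a, phases_b):
    """Magnitude of the mean unit vector of the phase differences (0 to 1)."""
    if len(phases_a) != len(phases_b) or not phases_a:
        raise ValueError("need two equal-length, non-empty phase series")
    vectors = [cmath.exp(1j * (a - b)) for a, b in zip(phases_a, phases_b)]
    return abs(sum(vectors) / len(vectors))

n = 200
# Synthetic phases in radians (not real EEG data).
phases_a = [2 * math.pi * 0.05 * t for t in range(n)]
# Signal B trails signal A by a constant lag, so the two stay locked.
locked_b = [p - 0.8 for p in phases_a]
# Signal C advances at a different rate, so its phase difference with A
# keeps sweeping around the circle.
drifting_c = [2 * math.pi * 0.06 * t for t in range(n)]

plv_locked = phase_locking_value(phases_a, locked_b)      # close to 1
plv_drifting = phase_locking_value(phases_a, drifting_c)  # close to 0
print(plv_locked, plv_drifting)
```

A value near 1 means the two signals keep a consistent phase relationship even if one lags the other; a value near 0 means no consistent relationship.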

Machine learning looms large in the public consciousness right now, and it is enjoying a number of applications in speech science. For example, an algorithm might help a speech pathologist analyze the vocal health of their patient based on recordings outside the clinic, as Lei and colleagues suggest. “Deviated vocal qualities, such as breathy and pressed voice, are associated with the presence of vocal pathologies (e.g., vocal polyp and nodules), vocal fatigue or some maladaptive vocal behaviors,” Lei explains. “We would like to develop a machine learning algorithm that can automatically classify and identify healthy vs unhealthy voice types for medical voice diagnosis and monitoring purposes.” For this, they created a wearable device that records neck vibration signals. It is an improvement over using audio recordings since it is less prone to interfering background noise, and it also protects the patient’s privacy since it doesn’t pick up words. First author Lei mentioned that the device and the algorithm could be useful for diagnosing vocal health problems “in many occupational voice users, such as voice actors, singers, teachers, and coaches.”

Zuidema and colleagues highlight computational approaches to artificial grammar learning in a recent review. This field is focused on understanding how people and nonhuman animals communicate by having them learn made-up languages or studying how they sequence their calls. Using computational models can lead to new insights and beautiful depictions of data, like this image plotting different syllables in bird songs.

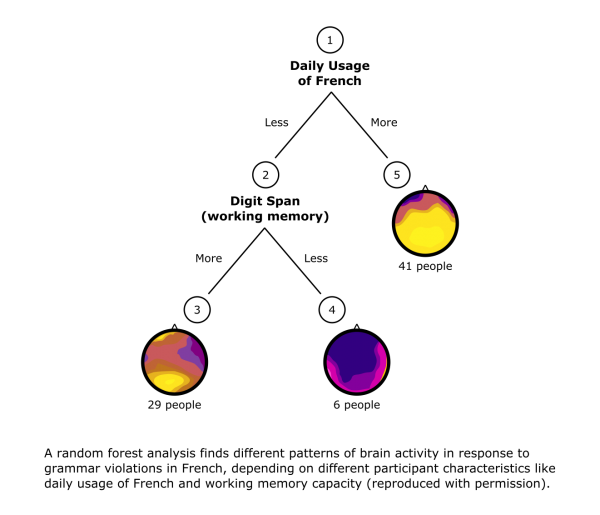

One specific machine learning technique that is seeing a lot of use is random forests: a classification algorithm that uses branching decision trees based on different input factors to predict a final outcome. James and colleagues (mentioned above) used random forests to analyze their songbird data. “What drew us to random forests in particular was the ability to calculate an ‘importance’ score for each of the [input] features that we measured,” James explained. For example, the duration of a birdsong element strongly predicted its position in the bird’s “phrase” (beginning, middle, or end).
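For readers curious what an importance score looks like in practice, the sketch below fits a random forest with scikit-learn on synthetic data and reads off its `feature_importances_`. Everything about the data is an assumption made for illustration: the feature names `duration_ms` and `pitch_hz`, the numbers, and the link between duration and position are invented, and this is not the authors' analysis pipeline.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier

rng = np.random.default_rng(0)
n = 300
# Synthetic labels (not real birdsong): 0 = beginning, 1 = middle, 2 = end.
position = rng.integers(0, 3, size=n)
# Hypothetical feature that tracks position: later elements tend to be longer.
duration_ms = 60 + 40 * position + rng.normal(0, 15, size=n)
# Hypothetical feature that is unrelated to position.
pitch_hz = rng.normal(600, 50, size=n)

X = np.column_stack([duration_ms, pitch_hz])
forest = RandomForestClassifier(n_estimators=200, random_state=0)
forest.fit(X, position)

# Impurity-based importances sum to 1 across features; a higher score
# means the trees relied more on that feature when splitting.
importances = dict(zip(["duration_ms", "pitch_hz"], forest.feature_importances_))
print(importances)
```

In this toy setup the duration feature should receive the larger score, because it is the only one that carries information about position. Impurity-based scores can be skewed by the scale and granularity of the features, so permutation-based importance is often used as a cross-check.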

Fromont and colleagues applied random forests in a different context: EEG recordings of people hearing French sentences with grammar violations. They found that proficiency in French and exposure to the language were more important than age of acquisition when it came to classifying the EEG responses, in contrast to other theories that emphasize early learning. Fromont was most excited about the advantages of random forests when it comes to correlated predictors: “When doing second language research, many factors that we take into account are correlated with one another. For example, proficiency in a second language tends to correlate with age of acquisition or exposure. The purpose of our research is precisely to try to tease these variables apart…random forests allow you to do that.”


References

- New Test Batteries and Questionnaires

- Coffey EBJ, Arseneau-Bruneau I, Zhang X, & Zatorre RJ. (2019). The Music-In-Noise Task (MINT): a tool for dissecting complex auditory perception. Frontiers in Neuroscience, 13.

- Gullifer JW & Titone D. (2019). Characterizing the Social Diversity of Bilingualism with Entropy. Bilingualism: Language & Cognition.

- Manipulating Perception

- Alkhateeb A, Voss P, Zeitouni A, & de Villers-Sidani E. (2019). Reversible external auditory canal ligation (REACL): A novel surgical technique to induce transient and reversible hearing loss in developing rats. J Neurosci Methods. 2: 1-6.

- James LS, Davies R Jr, Mori C, Wada K, & Sakata JT. (2021). Manipulations of sensory experiences during development reveal mechanisms underlying vocal learning biases in zebra finches. Developmental Neurobiology.

- Barbier G, Baum SR, Ménard L, & Shiller DM. (2020). Sensorimotor adaptation across the speech production workspace in response to a palatal perturbation. The Journal of the Acoustical Society of America, 147(2), 1163–1178.

- Sares AG, Deroche MLD, Ohashi H, Shiller DM, & Gracco VL. (2020). Neural Correlates of Vocal Pitch Compensation in Individuals Who Stutter. Front Hum Neurosci. 14:18.

- Eye tracking & Pupillometry

- Palma P, Whitford V, & Titone D. (2019). Cross-language activation and executive control modulate within-language ambiguity resolution: evidence from eye movements. Journal of Experimental Psychology: Learning, Memory & Cognition.

- Bianco R, Gold BP, Johnson AP & Penhune VB. (2019). Predictability and liking of music interact during listening and facilitate motor learning: evidence from pupil and motor responses in non-musicians. Scientific Reports 9:17060.

- Measuring Brainwaves

- Zamm A, Palmer C, Bauer AKR, Bleichner MG, Demos AP, & Debener S. (2019). Synchronizing MIDI and wireless EEG measurements during natural piano performance. Brain Research, 1716, 27-38.

- Sengupta R, Yaruss JS, Loucks TM, Gracco VL, Pelczarski K, & Nasir SM. (2019). Theta Modulated Neural Phase Coherence Facilitates Speech Fluency in Adults Who Stutter. Front Hum Neurosci. 13:394.

- Computational Techniques

- Lei ZD, Kennedy E, Fasanella L, Li-Jessen NYK, & Mongeau L. (2019). Discrimination between modal, breathy, and pressed voice for single vowels using neck-surface vibration signals. Applied Sciences, 9(7), 1505.

- Zuidema W, French RM, Alhama RG, Ellis K, O’Donnell TJ, Sainburg T, & Gentner TQ. (2019). Five Ways in Which Computational Modeling Can Help Advance Cognitive Science: Lessons From Artificial Grammar Learning. Top Cogn Sci. doi:10.1111/tops.12474.

- Fromont LA, Royle P, & Steinhauer K. (2020). Growing Random Forests reveals that exposure and proficiency best account for individual variability in L2 (and L1) brain potentials for syntax and semantics. Brain and Language, 204, 104770.